Stefan Deuchler

Human Computer Interaction

CEP (Candidate Experience Platform) implemented on client's portal

Project & Role

Semester-long group project

UX Researcher & Usability test moderator

Client

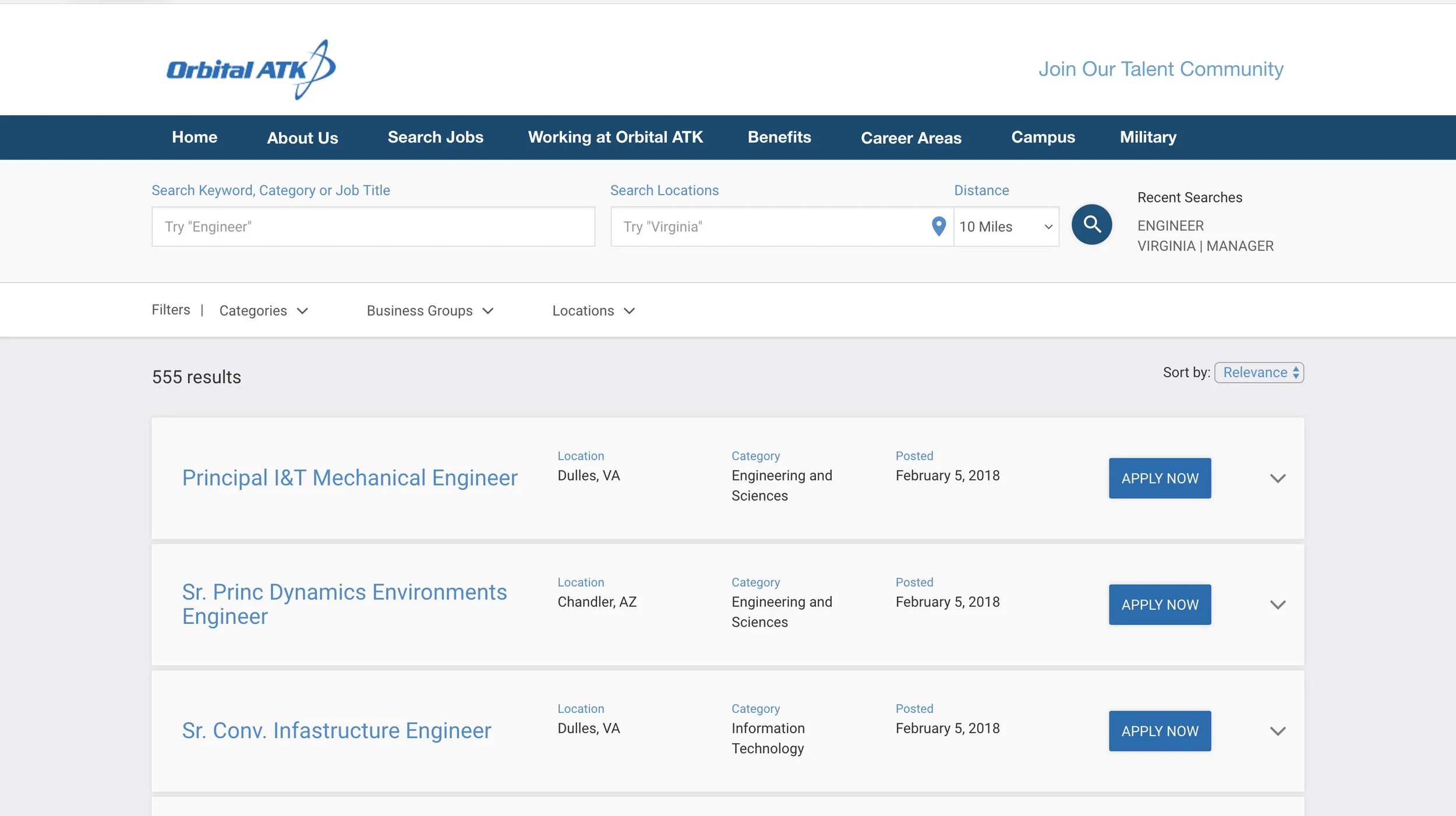

Jibe is a company that develops a job search and application platform (Candidate Experience Platform). We were asked to evaluate the platform as it was implemented on the website of Orbital ATK, an aerospace and defense technologies corporation. Our goal was to uncover current weaknesses and shortcomings and to provide recommendations on how to improve the site.

Stakeholder Interview

We first interviewed two representatives of our client to understand Jibe's business goals, both in general and specifically for the Candidate Experience Platform. We also wanted to make sure we understood the client's expectations for our work, to establish a common understanding of our deliverables, and to agree on specific delivery dates to avoid misunderstandings later on.

User Interviews

We conducted four one-on-one interviews with potential users of Jibe's Candidate Experience Platform, with the goal of answering the following research questions:

- Where are users of the site coming from?

- What are factors that keep users on the site?

- What drives users away from the site?

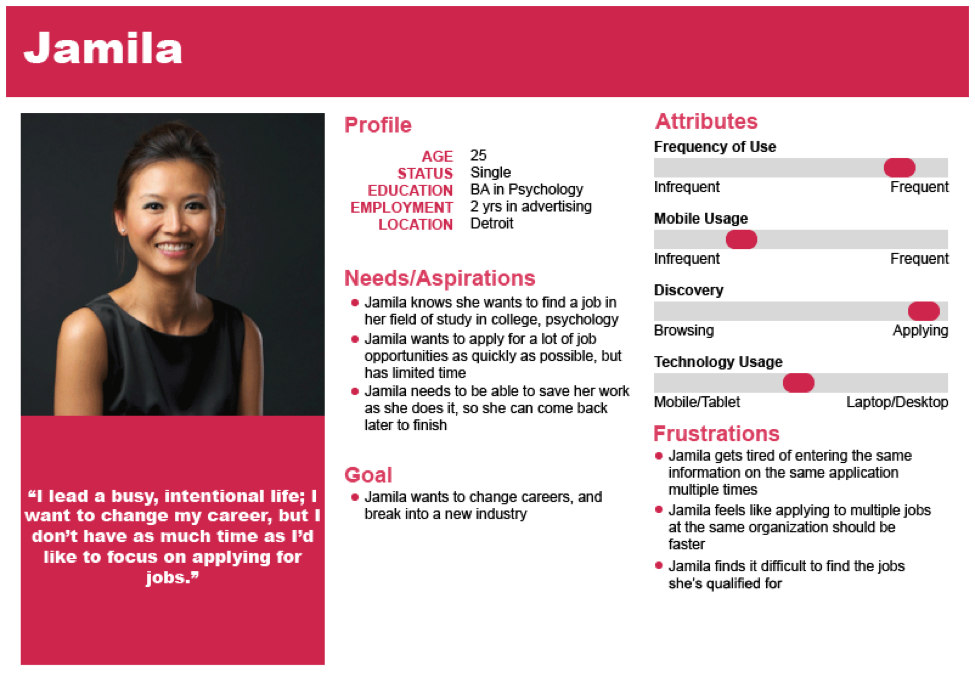

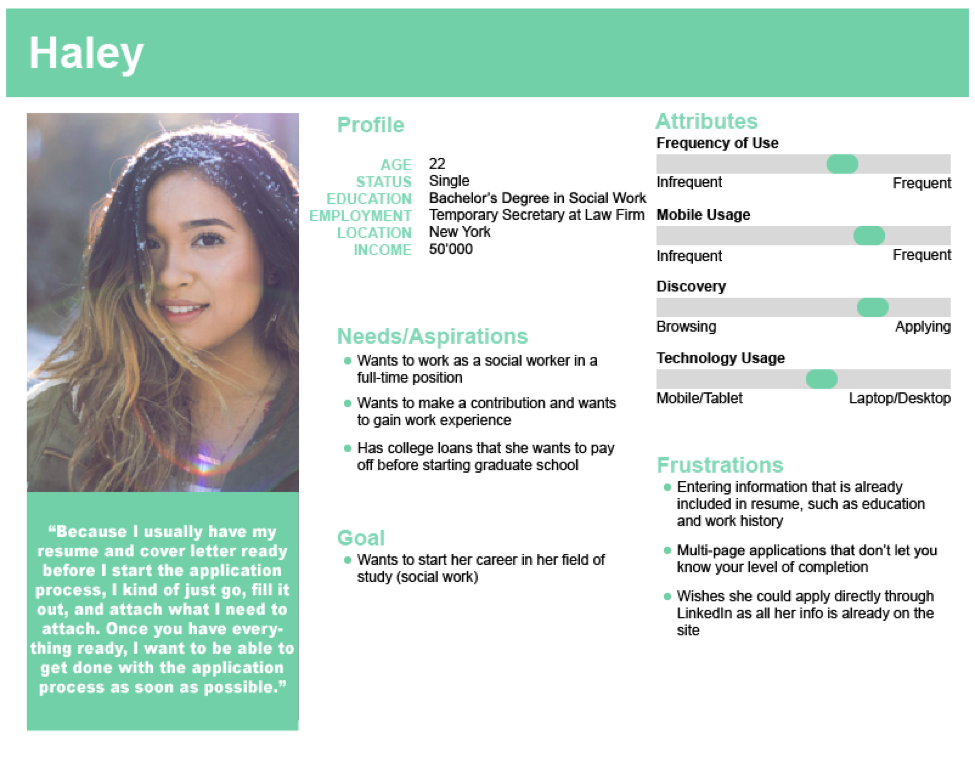

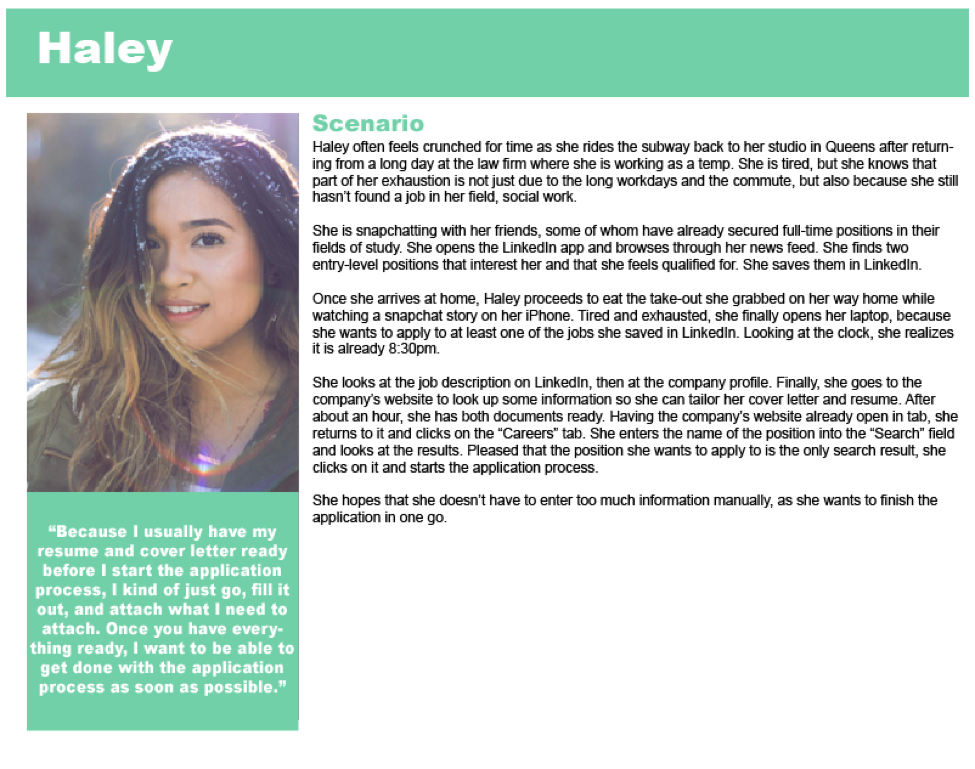

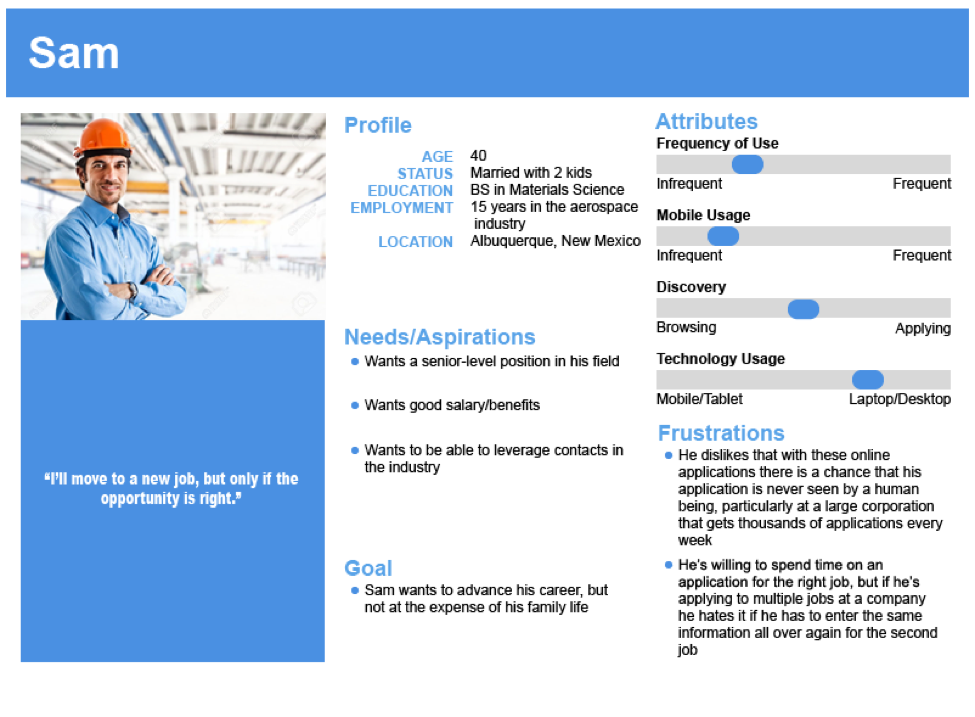

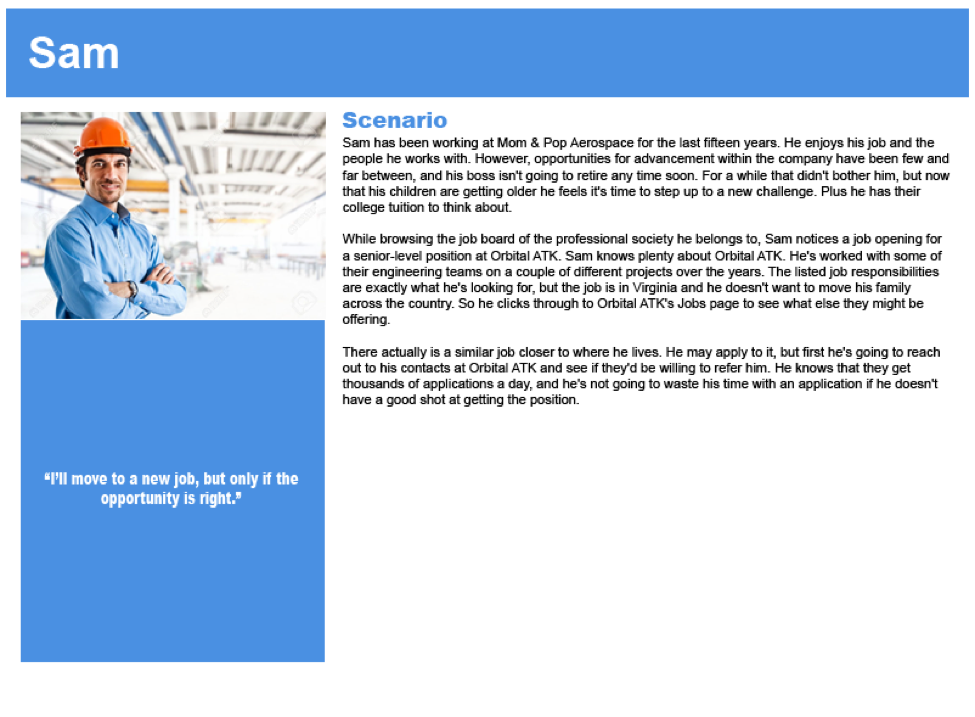

Personas

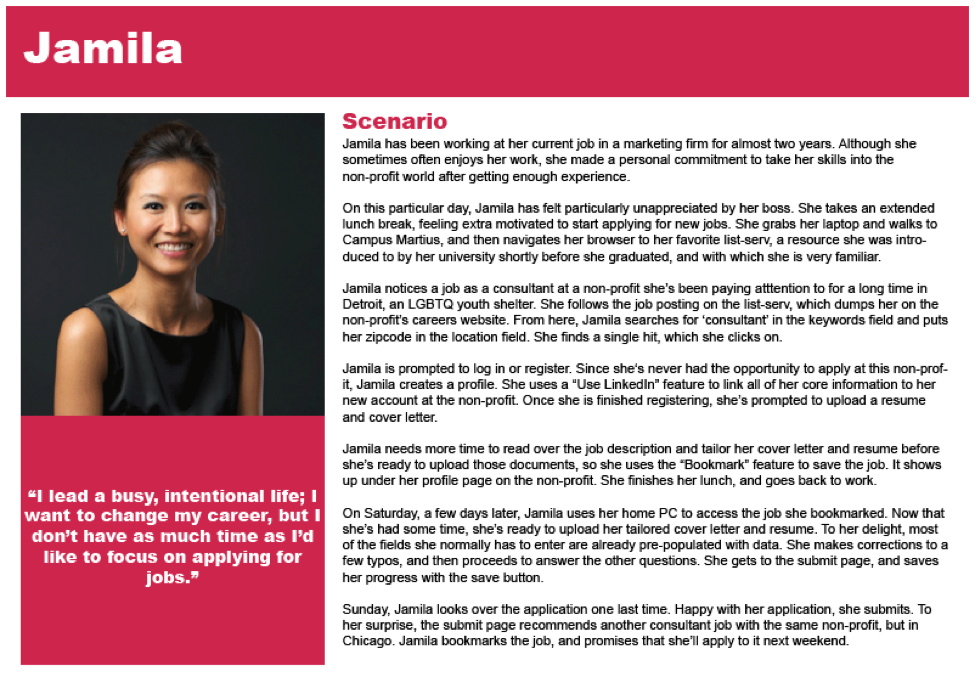

After transcribing the interviews, we conducted interpretation sessions as a group and coded the data. We then created a set of three personas to communicate the findings from our interviews. We also added scenarios to the personas to describe each one's unique approach to the job search. Because our group of interviewees was small, we did not synthesize personas from a large pool; instead, each persona reflects a single individual, with personally identifiable details (name, picture, and location) changed to protect their privacy.

Comparative Evaluation

To better understand the differences between Jibe and its comparators (direct, indirect, and partial), we first generated a list of features common among the comparators. We then identified which of those features might be effective in helping Jibe achieve its goals. Our evaluation was guided by the following two research questions:

- What are the differences between Jibe and its comparators?

- Based on the differences, what features and design elements can Jibe implement or eliminate to improve users’ experiences?

Comparison matrix for Search features

Comparison matrix for Apply features

Surveys

One of our client's main goals was to improve the quality of the job applicant pool. As the majority of the jobs advertised on the Orbital ATK site are STEM-related, we wanted to find out whether applicants with a STEM degree have any specific requirements. We used the following research questions to guide our survey design:

- In what ways do the needs and behaviors of applicants with a STEM background differ from the rest of the general applicant population?

- What job discovery methods do job applicants use and which ones prove to be most successful?

- What technology are job applicants using? Specifically, how important is a mobile version of the website?

- What are the chief frustrations job applicants encountered during their most recent job search?

Survey Design

We designed the survey so it could be completed in five minutes or less, in order to attract as many potential respondents as possible and to maximize the completion rate. We brainstormed and recorded approximately 30 possible survey questions before settling on a 2-page, 13-question format in Google Forms.

We tested the survey with five potential respondents (whose responses were not included in the final data set) for clarity, length, and potential pitfalls. After adjusting the survey with their feedback in mind, we deployed the survey via Facebook, which we chose for its broad range of user types.

Survey Results & Findings

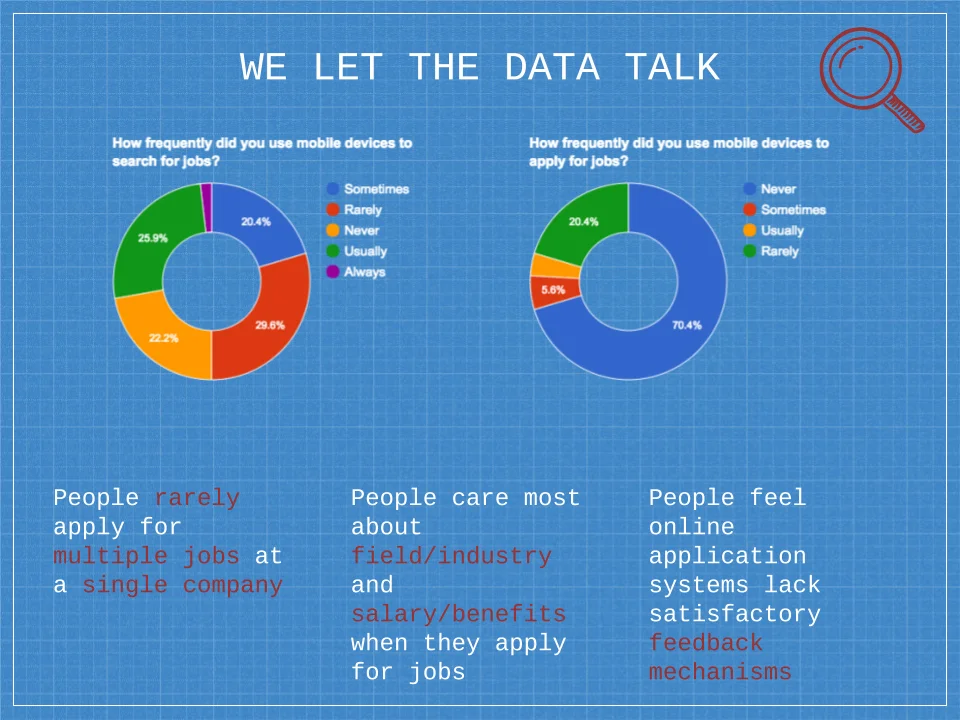

The results of our survey indicated that people rarely apply for jobs on mobile devices or apply for multiple jobs at a single company. They also suggest that field/industry and salary/benefits are the most important factors for people when they are looking for jobs, and that they would appreciate better feedback mechanisms from online application systems.

Based on these findings, we recommended that the mobile platform be optimized for search, that the system recommend relevant jobs to users, that default search results be sorted by field or industry, and that the system provide better post-application feedback.

Recommendations based on Heuristic Evaluation

Heuristic Evaluation

To evaluate Jibe's Candidate Experience Platform according to Nielsen's 10 Heuristics, we first identified the heuristic evaluation criteria that we felt were most salient for our client's site. We then created a path through the site and evaluated the journey as a group. Finally, each of us carried out an individual, in-depth evaluation of the pages along the defined journey.

Our goal was to answer the following research questions using Heuristic Evaluation:

- Which aspects of the Orbital ATK online application platform are functioning well already?

- Which aspects of the Orbital ATK online application platform are not good enough and can be improved?

Usability Testing

Apart from the in-person interviews, this was the part of our project that I enjoyed most. Having users perform a set of tasks is the best way to see which parts of the interface work well, and where there's room for improvement.

We formulated the following research questions to guide our usability tests:

- Where are the frustration points for users in the search & application processes?

- How are users using the search functionality?

- How long does it take to get through an application?

- How do users navigate the linear application when they have to move backward to correct mistakes?

- How difficult is it for users to get back to an application they’ve started but abandoned?

Rather than just having the users explore the site, we created a set of tasks that we asked each user to perform. We created the tasks based on our personas, as we were not able to find users that were actually looking for an engineering position on such short notice. We scripted the entire scenario and read it out loud to the participants as they were going through the tasks. We also had the users complete pre- and post-test questionnaires.

Final Report

Our final deliverable was a video that summarized our findings and our recommendations for improving Jibe's Candidate Experience Platform. We included clips from the usability testing sessions as well as slides from our milestone presentations to create a narrative that showcases our research approach and the process we followed.